I went to a very good conference at Elon University on Wednesday: the North Carolina Independent Schools and Universities Assessment Conference. One of the speakers was Jean Yerian from Virginia Commonwealth University. VCU has developed its effectiveness planning management system into a commercial product, which she demonstrated for us. You can get more information at www.weaveonline.net. I'm not sure what it costs, other than that pricing is FTE-driven. The system's strengths seem to be its rich reporting features, including an administrative overview that quickly shows compliance status. So if the English Department is slow about putting its plans, assessments, actions, or mission on the system, that shows up as Not Begun, In Progress, or Complete. They are planning to add a curriculum mapping feature that creates a matrix of courses and content per discipline. There is also a comment box for notes on the budgetary impact of activities and on follow-up plans. As yet the system has no built-in document repository, but I think that may be in the works too.
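To make the compliance overview concrete, here is a minimal Python sketch of how a Not Begun / In Progress / Complete status could be derived for each department. It is not WEAVEonline's actual logic: the rule (nothing entered, everything entered, or something in between) and the functions `compliance_status` and `overview` are hypothetical, and the four components are simply the ones named above.

```python
from enum import Enum

# Hypothetical required components, taken from the four items named in the post.
COMPONENTS = ("mission", "plans", "assessments", "actions")


class Status(Enum):
    NOT_BEGUN = "Not Begun"
    IN_PROGRESS = "In Progress"
    COMPLETE = "Complete"


def compliance_status(entered: set[str]) -> Status:
    """Classify one unit by how many required components it has entered.

    Assumed rule: none entered -> Not Begun, all entered -> Complete,
    anything in between -> In Progress. Unknown component names are ignored.
    """
    done = sum(1 for c in COMPONENTS if c in entered)
    if done == 0:
        return Status.NOT_BEGUN
    if done == len(COMPONENTS):
        return Status.COMPLETE
    return Status.IN_PROGRESS


def overview(units: dict[str, set[str]]) -> dict[str, str]:
    """Map each unit name to its status label, as an overview table might."""
    return {name: compliance_status(entered).value for name, entered in units.items()}


if __name__ == "__main__":
    units = {
        "English": set(),
        "Biology": {"mission", "plans"},
        "History": set(COMPONENTS),
    }
    for name, label in overview(units).items():
        print(f"{name}: {label}")
```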

This product has many more features than openIGOR does, and it's designed with a slightly different purpose in mind. openIGOR's functionality starts with the file repository and builds up from there, whereas WEAVEonline works in the other direction, starting from planning and reporting (it doesn't yet have a document repository). A current weakness of both systems is that they don't tie directly to evidence of student learning without extra work. The logical extension of each would be a link to a portfolio system. We already have an electronic portfolio system, but it isn't yet connected directly to assessment reporting. I hope to have that programming done this summer, though.

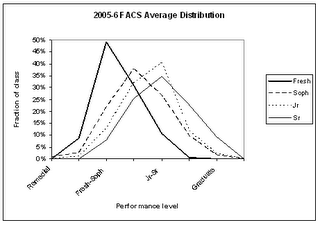

The data here aren't truly longitudinal: they're a composite of three classes, pooled to give a good sample size and show performance shifts over four years. A similar approach compares students based on their overall grade point averages. All students in these data sets were still attending as of Spring 2006, so survivorship effects (weaker students dropping out and inflating later-year averages) are controlled for.
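The composite design can be sketched in a few lines of Python. This is a minimal illustration, assuming each rating record carries a student ID, entering class, year of study, and score; the `Rating` fields, the `composite_by_year` helper, and the scoring scale are hypothetical, not the actual data layout behind the chart. Several classes are pooled, students who were no longer attending are dropped, and the remaining scores are averaged by year of study.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Rating:
    student_id: str
    cohort: int          # entering class, e.g. 2003 (hypothetical field)
    year_of_study: int   # 1 = first year ... 4 = fourth year
    score: float         # skill rating on some fixed scale (hypothetical)


def composite_by_year(
    ratings: list[Rating], still_enrolled: set[str], cohorts: set[int]
) -> dict[int, tuple[float, int]]:
    """Pool several entering classes into one pseudo-longitudinal curve.

    Only ratings from the chosen cohorts and from students in `still_enrolled`
    are kept, which is the survivorship control described in the post.
    Returns {year_of_study: (mean_score, number_of_ratings)}, ordered by year.
    """
    buckets: dict[int, list[float]] = defaultdict(list)
    for r in ratings:
        if r.cohort in cohorts and r.student_id in still_enrolled:
            buckets[r.year_of_study].append(r.score)
    return {year: (mean(scores), len(scores)) for year, scores in sorted(buckets.items())}


if __name__ == "__main__":
    # Tiny made-up records, for illustration only.
    ratings = [
        Rating("a", 2003, 1, 2.0),
        Rating("a", 2003, 2, 3.0),
        Rating("b", 2004, 1, 3.0),
        Rating("c", 2004, 1, 1.0),  # "c" has left, so it is excluded
    ]
    print(composite_by_year(ratings, {"a", "b"}, {2003, 2004, 2005}))
```

Reporting the count alongside each mean makes it easy to see when a year rests on too few ratings to trust.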

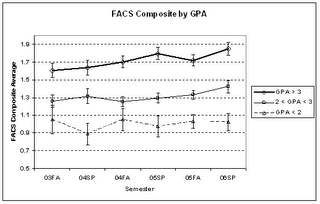

Here you can see that the better students actually seem to be learning. The middle group learns more slowly, and the students with GPA < 2 don't seem to be improving at all. These studies and others seem to validate our approach. The holy grail of this investigation is to pinpoint the contribution of individual courses to a student's skill improvement. I've worked on that quite a bit but have concluded that I don't yet have enough samples, nor a sophisticated enough approach to the problem. Stay tuned.
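The GPA comparison can be sketched the same way. In this hypothetical Python example, the 2.0 cutoff comes from the discussion above, while the 3.0 boundary between the "middle" and "upper" groups, the `(gpa, year_of_study, score)` tuple layout, and the `gain_by_band` helper are assumptions for illustration. It reports each group's change in mean score between its earliest and latest year of study; a gain near zero corresponds to the "not improving" pattern.

```python
from collections import defaultdict
from statistics import mean


def gpa_band(gpa: float, low: float = 2.0, high: float = 3.0) -> str:
    """Assign a student to a GPA group.

    The 2.0 cutoff is from the post; the 3.0 upper cutoff is an assumption.
    """
    if gpa < low:
        return "GPA < 2"
    if gpa < high:
        return "middle"
    return "upper"


def gain_by_band(rows: list[tuple[float, int, float]]) -> dict[str, float]:
    """rows: (gpa, year_of_study, score) tuples.

    Returns {band: mean score in latest year minus mean score in earliest year}
    for every band that has ratings in at least two distinct years.
    """
    by_band: dict[str, dict[int, list[float]]] = defaultdict(lambda: defaultdict(list))
    for gpa, year, score in rows:
        by_band[gpa_band(gpa)][year].append(score)

    gains = {}
    for band, years in by_band.items():
        if len(years) < 2:
            continue  # no change can be measured from a single year
        first, last = min(years), max(years)
        gains[band] = mean(years[last]) - mean(years[first])
    return gains


if __name__ == "__main__":
    # Made-up rows, for illustration only.
    rows = [(3.5, 1, 2.0), (3.5, 4, 3.5), (2.5, 1, 2.0), (2.5, 4, 2.5), (1.5, 1, 2.0), (1.5, 4, 2.0)]
    print(gain_by_band(rows))
```

A raw gain like this says nothing about which courses produced the change; isolating course-level contributions would need a model with course terms and considerably more data.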