Introduction

A common difficulty in regression modeling is to figure out which input variables should be included. One tool at our disposal is the added-variable plot. Given an existing model, the added-variable plot lets us visualize the improvement we would get by adding one more variable of our choosing. This idea is described nicely in A Modern Approach to Regression with R by Simon J. Sheather, pages 162-166.

A function for displaying add-variable curves can be found in R's car package, among other places. That package is the support software for An R Companion to Applied Regression, and has other useful functions for regression modeling.

Simulated Data

To illustrate the added-variable graphs, I'll use simulated data. Simulations are useful, because when we build the relationship between variables by hand, we know what the answer is when we start. Then we can check the actual answer versus the regression model.

library(tidyverse) library(broom) add_names <- function(x, .names){ colnames(x) <- .names return(x) } # create three variables that have correlations cm <- matrix( c( 1, .5, .6, .5, 1, .3, .6,.3, 1), nrow = 3, ncol = 3) sim_data <- MASS::mvrnorm(n = 1000, mu = c(0,0,0), Sigma = cm) %>% scale() %>% add_names(c("y","x","z")) %>% as_tibble() # check the variance properties var(sim_data) %>% round(2) y x z y 1.00 0.49 0.64 x 0.49 1.00 0.32 z 0.64 0.32 1.00

This code creates three variables: y (the output) and x,z (two inputs). They each have mean zero and variance 1, and--as you can see from the covariance matrix at the bottom--are each correlated with each other. Because of the scaling, the correlation coefficients between variables are the same as the covariances, so cor(x,y) = .49 in the sample data. Ideally it's .5, from the specification, but there's a bit of sampling error.

A Combined Model

One problem that bedevils regression analysis is that inputs can be correlated with each other, so the explanatory power of each in isolation may be quite good, but when we put them together in the model, they essentially provide the same information about the output--so the coefficients look weird. The coefficient may even have the "wrong" sign. This is not mistake; the output will always minimize squared error. Rather it's a consequence of the correlational structure of the inputs that confounds our intuition.

m_xyz <- lm(y ~ x + z, sim_data) summary(m_xyz) Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 6.226e-17 2.250e-02 0.00 1 x 3.145e-01 2.377e-02 13.23 <2e-16 *** z 5.362e-01 2.377e-02 22.56 <2e-16 *** --- Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1 Residual standard error: 0.7115 on 997 degrees of freedom Multiple R-squared: 0.4948, Adjusted R-squared: 0.4938 F-statistic: 488.3 on 2 and 997 DF, p-value: < 2.2e-16

Because the means are all zero, the intercept is zero. The coefficient estimates are given by the formula \( \beta = r_{xy} s_x / s_y \) for simple two-variable models (e.g. y predicted by x alone), so in our case the individual betas would be cor(sim_data$x, sim_data$y) * sd(sim_data$y) / sd(sim_data$x) = .488. But it's less than that here, because we've added z as an explanatory variable, which is correlated with x.

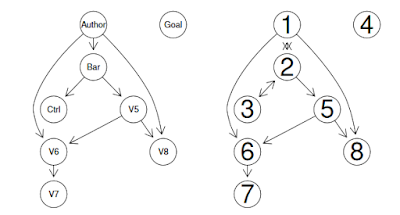

Added-Variable Plots

With the two-input model, we can now ask for add-variable plots from the car package with

car::avPlots(m_xyz)

The graph on the left starts with the model y ~ z and then figures out what the addition of x to this would add, to create y ~ x + z. The graph on the right does this in reverse, starting with y ~z and adding z. The slopes of the lines are the same as the regression coefficient that will result in the combined model for the given variable. So we want to see non-zero slopes. In this case, we can see that both of these inputs (x and z) are useful in explaining y.

Model Outputs

Since we are comparing several models, I'll show the summary results using the broom package.

# Models m_xy <- lm(y ~ x, sim_data) m_xz <- lm(z ~ x, sim_data) m_zy <- lm(y ~ z, sim_data) m_xyz <- lm(y ~ x + z, sim_data) # Assemble summary statistics m_stats <- map_df(list(m_xy,m_xz,m_zy,m_xyz),glance) m_stats$Model <- c("m_xy","m_xz","m_zy","m_xyz") m_stats$r <- sqrt(m_stats$r.squared) m_stats %>% select(Model,r, r.squared)

Model r r.squared <chr> <dbl> <dbl> 1 m_xy 0.487 0.237 2 m_xz 0.321 0.103 3 m_zy 0.637 0.406 4 m_xyz 0.703 0.495

I added the r column, which is the correlation between variables. You can check the covariance matrix above and verify that these are the same values found there (e.g. m_xy predicts y from x, and has r = .487, which corresponds to the second element of the first row of the covariance matrix (rounded to .49).

Discussion

There's a lot more to say about these graphs. The mathematical motivation is found in Sheather's book. In the next article I'll unpack the geometry behind the graphs and show why they still don't entirely solve our problem with co-linearity among predictors. Still, added-value graphs are a nice tool to have in the kit.