According to complexity theory, "random" means infinitely complex. Complexity is the size of a perfect description of, for example, a list of numbers. If we are given an infinitely long list of numbers like

1, 1, 1, 1, 1, ....it's easy to see that it has very low complexity. We can describe the sequence as "all ones." Similarly, the powers of two makes a low complexity sequence, or any other simple arithmetic sequence. We could create a more complicated computer program that tries to produce numbers that are as "mixed-up" as possible--this is what pseudo-random number generators do, but if we have access to the program (i.e. the description of the sequence), we could perfectly predict the numbers in the sequence. It's hard to call that random.

Truly random numbers (as far as we know) come from real-world phenomena like radioactive decay. You can have a certain amount of this so-called "entropy" for free from internet sources like Hotbits. I use such services for my artificial life experiments (insert maniacal laugh here). Real randomness is a valuable commodity, and I'm constantly running over my limit for what I can get for free from these sites. Here's a description from their site of where the numbers come from:

HotBits is an Internet resource that brings genuine random numbers, generated by a process fundamentally governed by the inherent uncertainty in the quantum mechanical laws of nature, directly to your computer in a variety of forms. HotBits are generated by timing successive pairs of radioactive decays detected by a Geiger-Müller tube interfaced to a computer.What would be involved if you wanted to predict a sequence of such numbers (which will come in binary as ones and zeros)? As far as we know, radioactive decay is not predictable from knowing the physical state of the system (see Bell's Theorem for more on such things).

Even in a mechanical system such as a spinning basket of ping-pong balls, like those used for selecting the winning numbers in lotteries, a complete description of the system that is sufficient to allow you to predict which balls will emerge to declare the winner would be a very long set of formulas and data. In other words, even if it's not infinitely complex, it's very, very complex.

But what if we want partial credit? This was the big idea that had half my brain working all day. What if we are content to predict some fraction of the sequence, and not every single output? (Like "lossy" compression of image files versus exact compressors for executables.) For example, if I flip a coin over and over, and I confidently "predict" for each flip that it will come up heads, I will be right about half the time (in fact, any predictor I use is going to be right half the time). So even with the simplest possible predictor, I can get 50% accuracy.

Imagine that we have an infinitely complex binary sequence S-INF that comes from some real source like radioactive decay. We write a program to do the following:

For the first 999,999 bits, we output a 1 each time. For the one-millionth bit we output the first S-INF bit, which is random. Then we repeat this process forever, with very long strings of 1s followed by a random one or zero. Call this sequence S-1It should be clear that a perfect predictor of S-1 is impossible with a finite program. It's still infinitely complex because of the interjection of bits from S-INF. But on the other hand, we can accurately predict the sequence a large portion of the time. We'll be wrong once in every two million bits, on average, if we just predict that the output will be 1 every time.

There is a big difference between randomness and predictability. If we take this another step, we could imagine making a picture of prediction-difficulty for a given sequence. An example from history may make this clearer.

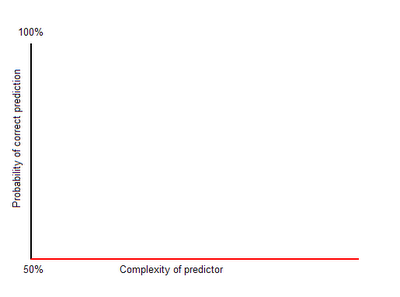

Before Galileo, many people presumably thought that heavy objects fell faster than lighter objects. This is a simple predictor of a physical system. It works fine with feathers and rocks, but it gives the wrong answer for rocks of different weights. Galileo and then Newton added more description (formulas, units, measurements) that allowed much better predictions. These turned out to be insufficient for very large scale cases, and Einstein added even more description (more math) to create a more complex way of predicting gravitational effects. We know that even relativity is incomplete, however, because it doesn't work on very small scales, so theorists are trying ideas like string theory to find an even more complex predictor that will work in more instances. This process of scientific discovery increases the complexity of the description and increases the accuracy of predictions as it does.Perfect prediction in the real world can be very, very complex, or even infinitely complex. That means that there isn't enough time or space to do the job perfectly. As someone else has noted (Arthur C. Clark?), some systems are so complex that the only way to "predict" what happens is to watch the system itself. But even very complex systems may be predictable with high probability, as we have seen. What is the relationship between the complexity of our best predictor and the probability of a correct prediction? This will depend on the system we are predicting--there are many possibilities. Below are some graphs to illustrate predictors of a binary sequence. Recall that a constant "predictor" can't do worse that 50%.

The optimistic case is pictured below. This is embodied in the philosophy of progress--as long as we keep working hard, creating more elaborate (and accurate) formulas, the results will come in the form of better and better predictors.

The worst case is shown below. No matter how hard we try, we can't do better than guessing. This is the case with radioactive decay (as far as anyone knows).

The graph below is more like the actual progress of science as a "punctuated equilibrium." There are increasingly large complexity deserts, where no improvement is seen. Compare the relatively few scientists that led to Newton's revolution or the efforts of Einstein and his collaborators to the massive undertaking that is string theory (and its competition, like loop quantum gravity).

Note that merely increasing the complexity of a predictor is easy. The hard part is figuring out how to increase prediction rates. You can always make a formula or description more complex, but by doing so it doesn't guarantee that the predictors are any better. Generally speaking, there is no computable (that is, systematic or deterministic) method for automatically finding optimal predictors for a given complexity level. You might think that you could just try every single program of a given complexity level and proceed by exhausting the possibilities, but you run into the Halting Problem. There are practical ways to tackle the problem though. This is a topic from the part of computer science called machine learning. A new tool that appeared this year from Cornell University is Eureqa, a program for finding formulas to fit patterns in data sets using an evolutionary approach.

Next time I will apply this idea to testing and outcomes assessment. It's very cool.

Great info here. Long time reader, first time poster....keep it up please!

ReplyDelete